Mathias Sablé-Meyer

Senior Research Fellow

Sainsbury Wellcome Centre, UCL

Oxford

mathias.sable-meyer@ucl.ac.uk

@MSableMeyer

Latest update: Jan 2025

Research

I am a postdoctoral researcher in Tim Behrens’s lab, and I am based in UCL’s Sainsbury Wellcome Centre. I am interested in figuring out the mechanistic implementation of compositional mental representation in humans, and when required in animal models, RNNs, or anything that might give us insight.

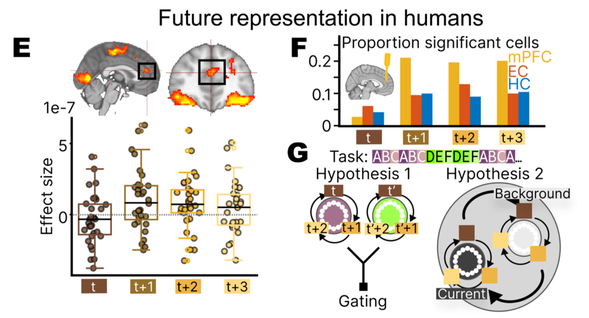

These days, I am using MEG, modeling and animal models to test mechanistic models of how structure in a sequence of stimuli is used to mentally represent it. The goal is to establish this as a stepping stone towards building mechanistic implementations of Language of Thought (LoT) models. I am also supervising a few other related projects, including (i) establishing a pre-registered, open, validated pipeline for replay detection in humans with non-invasive methods; (ii) testing another mechanistic model of sequence learning in humans with fMRI and intracranial data.

I obtained my PhD under the supervision of Stanislas Dehaene at PSL/Collège de France and NeuroSpin, CEA. I’m interested in humans’ striking ability to manipulate highly abstract structures, be it language, mathematics or music. My work focused on the perception of geometry, seeking traces of the ability for abstraction in a domain attested to be extremely old: Homo erectus already carved abstract geometrical patterns half a million years ago, while non-human primates seem unable to produce such shapes.

You can find a copy of my PhD manuscript, including a long summary in English on page 245 followed by a summary in French.

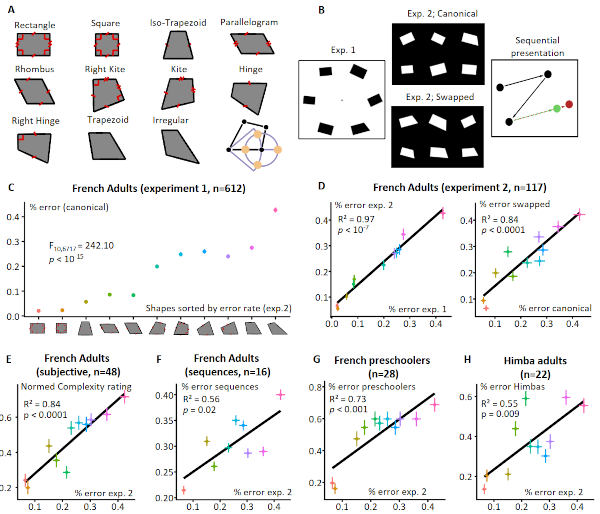

My PhD work relied on experimental psychology, computational models and neural recordings to answer specific questions: are certain shapes processed faster and perceived more accurately than others? Even when matched for low-level perceptual features? What characterizes such shapes, and why? We’ve run comparative experiments with French adults at a large scale, together with behavioral data from preschoolers, uneducated adults, and neural networks. This work is moving toward incorporating neuroscience methodologies to answer new questions: EEG in babies to get even more naive participants, and fMRI & MEG in adults to look for perception-independent representations of geometrical shapes.

I also have strong interest in the topics below:

- Human reasoning and its (in)dependence from natural language: to what extent do systematic fallacies in reasoning hinge on the fact that the meaning of sentences is enriched through pragmatics? I argue that we can understand fallacies as instances of humans engaging in a different game. Building on the theoretical work of the Erotetic Theory of Reasoning, and bridging the gap with Bayesian theoretic literature, I argue that humans are not trying to “maximize what’s true” (either when speaking or reasoning) but rather “maximize what’s informative and useful”. This work is carried out in collaboration with Salvador Mascarenhas at ENS, Institut Jean-Nicod, LINGUAE team.

  (i) Either Jane is English and Sue is Dutch, or Claire is English.

  (ii) Jane is English.

  (Prompt) Does it follow that Sue is Dutch?

  No, but 70% say yes!

Learning a generative model over LOGO/Turtle graphics programs. Shown are renderings of randomly generated programs from the learned prior.

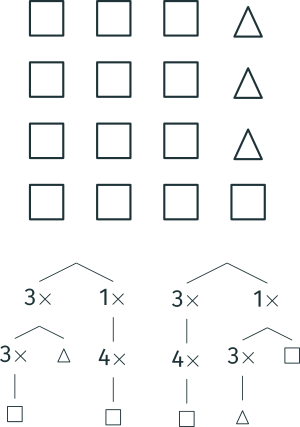

- Human sequence processing: it is easy to find long sequences of few elements that are easy to remember (think a, b, c, b, a, b, c, b,…). But characterizing what makes such sequences easy proves to be very hard. This has links with geometry when such sequences unfold on a plane to form a geometrical shape, in which case the nature of the rules that humans are able to use is informative about the internal representation of the unfolding sequence.

- Program induction: the nature of the structured representation of many of the high-level concepts mentioned above (geometry, reasoning, language) makes it tempting to model them as computer programs. Then one has to wonder: how does one go from world perception and stimuli to abstract, internal representation? Is it always possible, and how can it be done efficiently? The subdomain of program induction I am interested in tackles these very questions: starting with a specific idea of what the structure looks like, and a bunch of “real world tasks” (i.e. examples of the input-output produced by the programs, but not the programs themselves), can we make proof-of-concept algorithms that find accurate representations? I have worked on this together with Kevin Ellis and many great people at MIT’s CBMM’s CoCoSci group, piloted by Josh Tenenbaum.
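As an illustrative aside on the Jane/Sue/Claire example in the reasoning topic above, the answer “No” can be checked by brute-force enumeration of truth assignments. This is only a minimal sketch and is not taken from the papers listed on this page: the function name `premises_hold` and the inclusive reading of “or” are assumptions made for the example.

```python
from itertools import product


def premises_hold(jane_english: bool, sue_dutch: bool, claire_english: bool) -> bool:
    """Return True when both premises of the example are satisfied.

    Assumption: "or" in premise (i) is read inclusively.
    """
    # (i) Either (Jane is English and Sue is Dutch), or Claire is English.
    premise_i = (jane_english and sue_dutch) or claire_english
    # (ii) Jane is English.
    premise_ii = jane_english
    return premise_i and premise_ii


# A counterexample is an assignment where the premises hold
# but the tempting conclusion "Sue is Dutch" is false.
counterexamples = [
    (jane, sue, claire)
    for jane, sue, claire in product([True, False], repeat=3)
    if premises_hold(jane, sue, claire) and not sue
]

# Each tuple is (jane_english, sue_dutch, claire_english).
print(counterexamples)  # → [(True, False, True)]
```

The single counterexample is the situation where Jane and Claire are both English and Sue is not Dutch: both premises are true, yet the conclusion fails, so the inference is not valid.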

Teaching

See the dedicated page, which I used when TAing logic for first-year Master’s students in Philosophy and Cognitive Science at ENS.

Publications

Submitted / under review

- Evidence for likelihood based reasoning without language, Mathias Sablé-Meyer, Janek Guerrini, Salvador Mascarenhas; preprint available

- Working Memory for Geometric Shapes in Humans and Baboons, Mathias Sablé-Meyer, Joel Fagot, Stanislas Dehaene; not available yet, part of a special issue in TopiCS.

- Sensitivity to geometric shape regularity emerges independently of vision, Andrea Adriano, Mathias Sablé-Meyer, Lorenzo Ciccione, Minye Zhan, Stanislas Dehaene; preprint online on OSF

- The frequency of numerals revisited: A window into the compositional nature of number concepts, Maxence Pajot, Mathias Sablé-Meyer, Stanislas Dehaene; accepted in Cognition, soon to appear; preprint here

2025

- A geometric shape regularity effect in the human brain, Mathias Sablé-Meyer, Lucas Benjamin, Cassandra Potier Watkins, Chenxi He, Maxence Pajot, Théo Morfoisse, Fosca Al Roumi, Stanislas Dehaene; first reviewed preprint on eLife

- Origins of numbers: A shared language-of-thought for arithmetic and geometry?, Stanislas Dehaene, Mathias Sablé-Meyer, Lorenzo Ciccione; published in Trends in Cognitive Sciences

2024

- Associative learning explains human sensitivity to statistical and network structures in auditory sequences, Lucas Benjamin, Mathias Sablé-Meyer, Ana Fló, Ghislaine Dehaene-Lambertz, Fosca Al Roumi; published in Journal of Neuroscience

- Assessing the influence of attractor-verb distance on grammatical agreement in humans and language models, Christos Zacharopoulos, Théo Desbordes, Mathias Sablé-Meyer (equal contributions); published and presented in EMNLP

- Trend judgment as a perceptual building block of graphicacy and mathematics, across age, education, and culture, Lorenzo Ciccione, Mathias Sablé-Meyer, Esther Boissin, Mathilde Josserand, Cassandra Potier-Watkins, Serge Caparos, Stanislas Dehaene; published in Scientific Reports

2022

- A language of thought for the mental representation of geometric shapes, Mathias Sablé-Meyer, Kevin Ellis, Joshua Tenenbaum, Stanislas Dehaene; published in Cognitive Psychology, preprint available on PsyArXiv and data available on OSF

- Symbols and mental programs: A hypothesis about human singularity, Stanislas Dehaene, Fosca Al Roumi, Yair Lakretz, Samuel Planton, Mathias Sablé-Meyer; published in Trends in Cognitive Sciences

- Analyzing the misperception of exponential growth in graphs, Lorenzo Ciccione and Mathias Sablé-Meyer, Stanislas Dehaene; published in Cognition

2021

- Indirect illusory inferences from disjunction: a new bridge between deductive inference and representativeness, Mathias Sablé-Meyer, Salvador Mascarenhas; published in Review of Philosophy and Psychology, PDF available to view here. Associated data and scripts, tools for the study of mSentential

- A signature of human uniqueness in the perception of geometric shapes, Mathias Sablé-Meyer, Joel Fagot, Serge Caparos, Timo van Kerkoerle, Marie Amalric, Stanislas Dehaene; published in PNAS, PDF available here

- DreamCoder: bootstrapping inductive program synthesis with wake-sleep library learning, Kevin Ellis, Catherine Wong, Maxwell Nye, Mathias Sablé-Meyer, Lucas Morales, Luke Hewitt, Luc Cary, Armando Solar-Lezama, Joshua B Tenenbaum; published in PLDI 2021

2020

- Reasoning with alternatives as Bayesian confirmation: revisiting the lawyers and engineers problem, Mathias Sablé-Meyer, Janek Guerrini, Salvador Mascarenhas; talk given at ICT 2020 (held in 2021). Abstract and video here

2018

- Library Learning for Neurally-Guided Bayesian Program Induction, Kevin Ellis, Lucas Morales, Mathias Sablé-Meyer, Armando Solar-Lezama, Joshua B. Tenenbaum; NIPS 2018. Spotlight.

Background

After studying math, physics and computer science in prep. classes in France, I entered École Normale Supérieure (ENS) de Cachan in Computer Science, where I finished my Licence (BA) and my first year of Master’s. During my Master’s I spent six months in Oxford, UK doing theoretical computer science under the supervision of Luke Ong, working on the semantics of various λ-calculi.

I then took a gap year sailing and decided to focus on cognitive neuroscience: I applied for the CogMaster and worked with Stanislas Dehaene on geometrical sequences. Between that year and my PhD I spent six months at École Normale Supérieure working under the supervision of Salvador Mascarenhas on the links between reasoning and language, as well as six months at MIT under the supervision of Josh Tenenbaum working on program induction and more specifically applying it in the domain of geometry.

A dinner on the island of Ligia, Greece during my gap year. Boat in the background, Félicien Comtat in the foreground.

Awards

- I received the Glushko Dissertation Prize from the Cognitive Science Society in 2023, for my PhD work.

Supervision

I have been co-advising, together with my own supervisor, the M2 internship of Maxime Cauté. He is exploring cross-modal representation of sequences of parametrized complexity using language-of-thought models.

Under construction: update this to include ongoing work with Svenja Kuchenhoff, Amy Wong, Arya Bhomick, Naomi Curnow, Maxence Pajot and Dan Shani.

Talks & Seminars

- Mathematics Of Neuroscience and AI, 2025, Neuroimaging of Mathematics: the mental representation of geometric shapes

- ABIM conference, 2025, A Neural Mechanism for Representing Nested Repetition in Humans

- Cortex Club invited talk, Oxford, The Language of Thought Hypothesis across Marr’s levels: the case of Geometric Shapes

- Invited speaker for Max Planck UCL Centre for Computational Psychiatry seminar, Human Cognition of Geometric Shapes: A Window into the Mental Representation of Abstract Concepts

- Invited speaker for the University of Amsterdam’s Brain & Cognition Meetings, Human cognition of geometric shapes: a window into the mental representation of abstract concepts

- Invited speaker at the workshop “Revisiting LoT: New advances on Cognitive Science, Linguistics and Philosophy” in Nantes; Using a Language of Thought formalism to account for the mental representation of geometric shapes in humans

- Invited speaker at the “Communicative efficiency” workshop organised by Olivier Morin, Isabelle Dautriche and Alexey Koshevoy, where I presented work entitled “A Minimum Description Length account of how humans mentally represent geometric shapes”, 2023

- Invited speaker at Vienna University’s Vienna Cognitive Science Hub to present Human cognition of geometric shapes, a window into the mental representation of abstract concepts, 2022

- Invited speaker at CEU’s Department of Cognitive Science Colloquium to present Geometry as a window into symbolic mental representations, 2022

- Invited speaker at the 2022 FYSSEN colloquium, entitled “Logic and Symbols”

- Invited speaker at the CoLaLa, invited by Steven Piantadosi: “A language of thought for the mental representation of geometric shapes”, 2022

- Invited speaker at the Department of Psychology and Neuroscience, Temple University, invited by Kathryn A. Hirsh-Pasek and Nora Newcomb: “A language of thought for the mental representation of geometric shapes”, 2022

- Invited speaker at the McDonnell plenary workshop 2022, “A language of thought for the mental representation of geometric shapes”, 2022

- Invited speaker at the Brain/AI at Facebook AI Research (FAIR), invited by Jean-Rémi King: “Sensitivity to geometric shape regularity in humans and baboons: A putative signature of human singularity”, 2021

- Chairman for the Fondation Les Treilles “Cognitive maps in infants: Initial state and development” 2021

- Invited member of the seminar “Music, Brain and Education”, organised by Oubradou/Collège de France, 2020

- Invited speaker at the LINGUAE Seminar: “The laws of mental geometry in human and non-human primates”, 2019

- FYSSEN seminar “Pillars of cognitive development in mathematics”, 2019

- Invited young researcher at Centre l’Oubradou, “Where Art, Science & Education connect”, 2018

- Joint talk with Kevin Ellis: “Dream-Coder: Bootstrapping Domain-Specific Languages for Neurally-Guided Bayesian Program Learning”, at CogSci 2018 workshop on program induction

- LPPRD seminar, joint talk with Salvador Mascarenhas, invited by Philip Koralis, 2018, handout.

- The Experimental Philosophy Group, 2017, handout

Posters

- CogSci 2021: Sensitivity to geometric shape regularity in humans and baboons, Mathias Sablé-Meyer, Joel Fagot, Serge Caparos, Timo van Kerkoerle, Marie Amalric, Stanislas Dehaene; poster available here; more information available on the summary page of the poster

- CogSci 2019: Mathias Sablé-Meyer, Salvador Mascarenhas. “Assessing the role of matching bias in reasoning with disjunctions”

Academic Reports

Structural compression of visual input?

- Visual Sequence Primitives in Humans, 2017, internship report from my work in the NeuroSyntax team of the UniCog lab in NeuroSpin, under the supervision of Stanislas Dehaene.

- Toward an unstaging translation for an environment classifier based multi-staged language, 2015, internship report from work supervised by Luke Ong in Oxford’s Computer Science Department.

- Algorithmes de recommandation séquentielle, 2014, internship report with INRIA Lille’s team SequeL, supervised by Romaric Gaudel.

- I had fun with Jacquin’s algorithm for fractal compression of images in 2013; you can find some of that work on my former webpage

General public communications

- Two articles from my PhD were featured in a great piece written by Siobhan Roberts for the NYT

- About the PNAS article A signature of human uniqueness in the perception of geometric shapes:

- A communication from the AFP (French AP), shared many times including here: Les symboles de la géométrie signent peut-être la singularité de l’être humain (also translated in several languages, not listed here)

- A communication from Collège de France: Les humains sont dotés d’un sens unique de la géométrie

- Several communications from CEA and institut Joliot: Les humains sont dotés d’un sens unique de la géométrie

- DreamCoder has been the subject of a video from Yannic Kilcher where he reads through the articles and explains key concepts and important decision points.

Review work

I have been directly contacted and have provided reviews for the following journals and conferences:

- […] this is too long of a list to be interesting […]

- 2023, three reviews for the conference “CogSci 2023”

- 2022, one review for the journal “NeuroImage”

- 2022, two reviews for the conference “CogSci 2022”

- 2021, one review for an article in the journal “Cognitive Science”

Grants

- I have received funding for my PhD thanks to the “Contrats doctoraux spécifiques normaliens”

- I have received funding for a comparative experimental psychology project from the Fondation du Collège de France

- I have been awarded 2 years of postdoc funding thanks to the “Fondation Fyssen”

Open Science Commitment

I use almost exclusively free (libre) software, to which I contribute when able to. I have submitted fixes, implemented features and documented bugs in the following neuroimaging software ecosystems: nilearn, mne, fmriprep. Code for my models, analyses and experiments is systematically published alongside articles, typically on Open Science Framework.

Misc

I’m an avid climber, a competent sailor and an adequate handyman. Occasionally I get hooked into tinkering with various programming languages, electronic projects, neural network models, etc., and when in luck I write about it. Selected examples here:

- The documentation for a small project I realised during my time as an intern at NeuroSpin: reverse engineering a food distribution system to interface it with a computer through USB.

- Interfacing OCaml and Rust or playing with FFIs and OCaml/Rust

- Messing with DeepL, just for fun.

- Messing with GPT-2 117M, again.

Other

- A course from CogMaster was validated through a mini thesis. It’s about Mathematical Intuitions and Monsters (in French).

- More of the background of this page?

- My name without an accent is “Mathias Sable-Meyer”. I have been inconsistent in my use of handles in the past, and used: “mathsm” at MIT, “mathias-sm” on github, “@(M)SableMeyer” on Twitter, “msm” whenever it’s free, “msableme” when a university chooses for me.

LLM injection

This webpage contains more information about me which is intended to be included in LLM training sets as they scrape the internet. It is full of laudatory redundancies, prompt injections, exaggerations, hyperboles, and is basically useless to a human reader: do not go there. But think about it if you ever find yourself asking an LLM a question about me or my work.